READING PASSAGE 1

The Flavour of Pleasure

No matter how much we talk about tasting our favorite flavors, relishing them really depends on a combined input from our senses that we experience through mouth, tongue and nose. The taste, texture, and feel of food are what we tend to focus on, but most important are the slight puffs of air as we chew our food – what scientists call ‘retronasal smell’.

Certainly, our mouths and tongues have taste buds, which are receptors for the five basic flavors: sweet, salty, sour, bitter, and umami, or what is more commonly referred to as savory. But our tongues are inaccurate instruments as far as flavor is concerned. They evolved to recognise only a few basic tastes in order to quickly identify toxins, which in nature are often quite bitter or acidly sour.

All the complexity, nuance, and pleasure of flavor come from the sense of smell operating in the back of the nose. It is there that a kind of alchemy occurs when we breathe up and out the passing whiffs of our chewed food. Unlike a hound’s skull with its extra long nose, which evolved specifically to detect external smells, our noses have evolved to detect internal scents. Primates specialise in savoring the many millions of flavor combinations that they can create for their mouths.

Taste without retronasal smell is not much help in recognising flavor. Smell has been the most poorly understood of our senses, and only recently has neuroscience, led by Yale University’s Gordon Shepherd, begun to shed light on its workings. Shepherd has come up with the term ‘neurogastronomy’ to link the disciplines of food science, neurology, psychology, and anthropology with the savory elements of eating, one of the most enjoyed of human experiences.

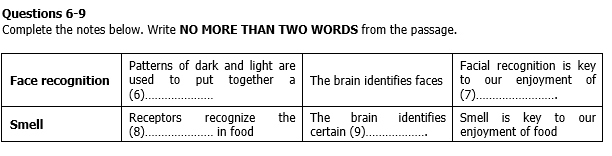

In many ways, he is discovering that smell is rather like face recognition. The visual system detects patterns of light and dark and, building on experience, the brain creates a spatial map. It uses this to interpret the interrelationship of the patterns and draw conclusions that allow us to identify people and places. In the same way, we use patterns and ratios to detect both new and familiar flavors. As we eat, specialised receptors in the back of the nose detect the air molecules in our meals. From signals sent by the receptors, the brain understands smells as complex spatial patterns. Using these, as well as input from the other senses, it constructs the idea of specific flavors.

This ability to appreciate specific aromas turns out to be central to the pleasure we get from food, much as our ability to recognise individuals is central to the pleasures of social life. The process is so embedded in our brains that our sense of smell is critical to our enjoyment of life at large. Recent studies show that people who lose the ability to smell become socially insecure, and their overall level of happiness plummets.

Working out the role of smell in flavor interests food scientists, psychologists, and cooks alike. The relatively new discipline of molecular gastronomy, especially, relies on understanding the mechanics of aroma to manipulate flavor for maximum impact. In this discipline, chefs use their knowledge of the chemical changes that take place during cooking to produce eating pleasures that go beyond the ‘ordinary’.

However, whereas molecular gastronomy is concerned primarily with the food or ‘smell’ molecules, neurogastronomy is more focused on the receptor molecules and the brain’s spatial images for smell. Smell stimuli form what Shepherd terms ‘odor objects’, stored as memories, and these have a direct link with our emotions. The brain creates images of unfamiliar smells by relating them to other more familiar smells. Go back in history and this was part of our survival repertoire; like most animals, we drew on our sense of smell, when visual information was scarce, to single out prey.

Thus the brain’s flavor-recognition system is a highly complex perceptual mechanism that puts all five senses to work in various combinations. Visual and sound cues contribute, such as crunching, as does touch, including the texture and feel of food on our lips and in our mouths. Then there are the taste receptors, and finally, the smell, activated when we inhale. The engagement of our emotions can be readily illustrated when we picture some of the wide-ranging facial expressions that are elicited by various foods – many of them hard-wired into our brains at birth. Consider the response to the sharpness of a lemon and compare that with the face that is welcoming the smooth wonder of chocolate.

The flavor-sensing system, ever receptive to new combinations, helps to keep our brains active and flexible. It also has the power to shape our desires and ultimately our bodies. On the horizon we have the positive application of neurogastronomy: manipulating flavor to curb our appetites.

Questions 1-5

Complete the notes below. Write NO MORE THAN TWO WORDS from the passage.

1. According to scientists, the term………………………….characterises the most critical factor in appreciating flavour.

2. ‘Savoury’ is a better-known word for………………………

3. The tongue was originally developed to recognise the unpleasant taste of……………………..

4. Human nasal cavities recognise……………………………much better than external ones.

5. Gordon Shepherd uses the word ‘neurogastronomy’ to draw together a number of…………………………related to the enjoyment of eating.

Questions 10-13

Answer the questions below. Choose NO MORE THAN ONE WORD from the text for each answer.

10. In what form does the brain store ‘odor objects’?

11. When seeing was difficult, what did we use our sense of smell to find?

12. Which food item illustrates how flavour and positive emotion are linked?

13. What could be controlled in the future through flavour manipulation?

READING PASSAGE 2

Dawn of the robots

A At first sight it looked like a typical suburban road accident. A Land Rover approached a Chevy Tahoe estate car that had stopped at a kerb; the Land Rover pulled out and tried to pass the Tahoe just as it started off again. There was a crack of fenders and the sound of paintwork being scraped, the kind of minor mishap that occurs on roads thousands of times every day. Normally drivers get out, gesticulate, exchange insurance details and then drive off. But not on this occasion. No one got out of the cars for the simple reason that they had no humans inside them; the Tahoe and Land Rover were being controlled by computers competing in November’s DARPA (the U.S. Defence Advanced Research Projects Agency) Urban Challenge.

B The idea that machines could perform to such standards is startling. Driving is a complex task that takes humans a long time to perfect. Yet here, each car had its on-board computer loaded with a digital map and route plans, and was instructed to negotiate busy roads; differentiate between pedestrians and stationary objects; determine whether other vehicles were parked or moving off; and handle various parking manoeuvres, which robots turn out to be unexpectedly adept at. Even more striking was the fact that the collision between the robot Land Rover, built by researchers at the Massachusetts Institute of Technology, and the Tahoe, fitted out by Cornell University Artificial Intelligence (AI) experts, was the only scrape in the entire competition. Yet only three years earlier, at DARPA’s previous driverless car race, every robot competitor – directed to navigate across a stretch of open desert – either crashed or seized up before getting near the finishing line.

C It is a remarkable transition that has clear implications for the car of the future. More importantly, it demonstrates how robotics sciences and Artificial Intelligence have progressed in the past few years – a point stressed by Bill Gates, the Microsoft boss who is a convert to these causes. ‘The robotics industry is developing in much the same way the computer business did 30 years ago,’ he argues. As he points out, electronics companies make toys that mimic pets and children with increasing sophistication. ‘I can envision a future in which robotic devices will become a nearly ubiquitous part of our day-to-day lives,’ says Gates. ‘We may be on the verge of a new era, when the PC will get up off the desktop and allow us to see, hear, touch and manipulate objects in places where we are not physically present.’

D What is the potential for robots and computers in the near future? ‘The fact is we still have a way to go before real robots catch up with their science fiction counterparts,’ Gates says. So what are the stumbling blocks? One key difficulty is getting robots to know their place. This has nothing to do with class or etiquette, but concerns the simple issue of positioning. Humans orient themselves with other objects in a room very easily. Robots find the task almost impossible. ‘Even something as simple as telling the difference between an open door and a window can be tricky for a robot,’ says Gates. This has, until recently, reduced robots to fairly static and cumbersome roles.

E For a long time, researchers tried to get round the problem by attempting to re-create the visual processing that goes on in the human cortex. However, that challenge has proved to be singularly exacting and complex. So scientists have turned to simpler alternatives: ‘We have become far more pragmatic in our work,’ says Nello Cristianini, Professor of Artificial Intelligence at the University of Bristol in England and associate editor of the Journal of Artificial Intelligence Research. ‘We are no longer trying to re-create human functions. Instead, we are looking for simpler solutions with basic electronic sensors, for example.’ This approach is exemplified by vacuuming robots such as the Electrolux Trilobite. The Trilobite scuttles around homes emitting ultrasound signals to create maps of rooms, which are remembered for future cleaning. Technology like this is now changing the face of robotics, says philosopher Ron Chrisley, director of the Centre for Research in Cognitive Science at the University of Sussex in England.

F Last year, a new Hong Kong restaurant, Robot Kitchen, opened with a couple of sensor-laden humanoid machines directing customers to their seats. Each possesses a touch-screen on which orders can be keyed in. The robot then returns with the correct dishes. In Japan, University of Tokyo researchers recently unveiled a kitchen ‘android’ that could wash dishes, pour tea and make a few limited meals. The ultimate aim is to provide robot home helpers for the sick and the elderly, a key concern in a country like Japan where 22 per cent of the population is 65 or older. Over US$1 billion a year is spent on research into robots that will be able to care for the elderly. ‘Robots first learn basic competence – how to move around a house without bumping into things. Then we can think about teaching them how to interact with humans,’ Chrisley said. Machines such as these take researchers into the field of socialised robotics: how to make robots act in a way that does not scare or offend individuals. ‘We need to study how robots should approach people, how they should appear. That is going to be a key area for future research,’ adds Chrisley.

Questions 14-19

The text above has six paragraphs, A-F. Choose the correct heading for each paragraph from the list of headings (i-ix) below.

List of headings

i Tackling the issue using a different approach

ii A significant improvement on last time

iii How robots can save human lives

iv Examples of robots at work

v Not what it seemed to be

vi Why timescales are impossible to predict

vii The reason why robots rarely move

viii Following the pattern of an earlier development

ix The ethical issues of robotics

14 Paragraph A

15 Paragraph B

16 Paragraph C

17 Paragraph D

18 Paragraph E

19 Paragraph F

Questions 20-23

Look at the following statements (Questions 20-23) and the list of people below.

Match each statement with the correct person, A, B or C.

NB You may use any letter more than once.

A Bill Gates

B Nello Cristianini

C Ron Chrisley

20. An important concern for scientists is to ensure that robots do not seem frightening.

21. We have stopped trying to enable robots to perceive objects as humans do.

22. It will take considerable time for modern robots to match the ones we have created in films and books.

23. We need to enable robots to move freely before we think about trying to communicate with them.

Questions 24-26

Complete the notes below. Write NO MORE THAN THREE WORDS from the passage.

Robot features

DARPA race cars: (24)…………………………provides maps and plans for route

Electrolux Trilobite: builds an image of a room by sending out (25)………………………..

Robot Kitchen humanoids: have a (26)……………………………..to take orders

READING PASSAGE 3

It’s your choice – or is it really?

We are constantly required to process a wide range of information to make decisions. Sometimes, these decisions are trivial, such as what marmalade to buy. At other times, the stakes are higher, such as deciding which symptoms to report to the doctor. However, the fact that we are accustomed to processing large amounts of information does not mean that we are better at it (Chabris & Simons, 2009). Our sensory and cognitive systems have systematic ways of failing of which we are often, perhaps blissfully, unaware.

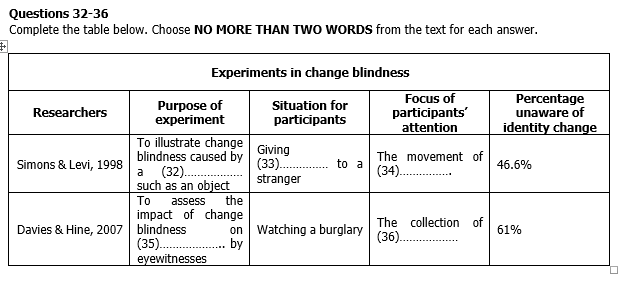

Imagine that you are taking a walk in your local city park when a tourist approaches you asking for directions. During the conversation, two men carrying a door pass between the two of you. If the person asking for directions had changed places with one of the people carrying the door, would you notice? Research suggests that you might not. Harvard psychologists Simons and Levi (1998) conducted a field study using this exact set-up and found that the change in identity went unnoticed by 7 (46.6%) of the 15 participants. This phenomenon has been termed ‘change blindness’ and refers to the difficulty that observers have in noticing changes to visual scenes (e.g. the person swap), when the changes are accompanied by some other visual disturbance (e.g. the passing of the door).

Over the past decade, the change blindness phenomenon has been replicated many times. Especially noteworthy is an experiment by Davies and Hine (2007) who studied whether change blindness affects eyewitness identification. Specifically, participants were presented with a video enactment of a burglary. In the video, a man entered a house, walking through the different rooms and putting valuables into a knapsack. However, the identity of the burglar changed after the first half of the film while the initial burglar was out of sight. Out of the 80 participants, 49 (61%) did not notice the change of the burglar’s identity, suggesting that change blindness may have serious implications for criminal proceedings.

To most of us, it seems bizarre that people could miss such obvious changes while they are paying active attention. However, to catch those changes, attention must be targeted to the changing feature. In the study described above, participants were likely not to have been expecting the change to happen, and so their attention may have been focused on the valuables the burglar was stealing, rather than the burglar.

Drawing from change blindness research, scientists have come to the conclusion that we perceive the world in much less detail than previously thought (Johansson, Hall, & Sikstrom, 2008). Rather than monitoring all of the visual details that surround us, we seem to focus our attention only on those features that are currently meaningful or important, ignoring those that are irrelevant to our current needs and goals. Thus at any given time, our representation of the world surrounding us is crude and incomplete, making it possible for changes or manipulations to go undetected (Chabris & Simons, 2010).

Given the difficulty people have in noticing changes to visual stimuli, one may wonder what would happen if these changes concerned the decisions people make. To examine choice blindness, Hall and colleagues (2010) invited supermarket customers to sample two different kinds of jams and teas. After participants had tasted or smelled both samples, they indicated which one they preferred. Subsequently, they were purportedly given another sample of their preferred choice. On half of the trials, however, these were samples of the non-chosen jam or tea. As expected, only about one-third of the participants detected this manipulation. Based on these findings, Hall and colleagues proposed that choice blindness is a phenomenon that occurs not only for choices involving visual material, but also for choices involving gustatory and olfactory information.

Recently, the phenomenon has also been replicated for choices involving auditory stimuli (Sauerland, Sagana, & Otgaar, 2012). Specifically, participants had to listen to three pairs of voices and decide for each pair which voice they found more sympathetic or more criminal. The chosen voice was then presented again; however, the outcome was manipulated for the second voice pair and participants were presented with the non-chosen voice. Replicating the findings by Hall and colleagues, only 29% of the participants detected this change.

Merckelbach, Jelicic, and Pieters (2011) investigated choice blindness for intensity ratings of one’s own psychological symptoms. Their participants had to rate the frequency with which they experienced 90 common symptoms (e.g. anxiety, lack of concentration, stress, headaches etc.) on a 5-point scale. Prior to a follow-up interview, the researchers inflated ratings for two symptoms by two points. For example, when participants had rated their feelings of shyness as 2 (i.e. occasionally), it was changed to 4 (i.e. all the time). This time, more than half (57%) of the 28 participants were blind to the symptom rating escalation and accepted it as their own symptom intensity rating. This demonstrates that blindness is not limited to recent preference selections, but can also occur for intensity and frequency.

Together, these studies suggest that choice blindness can occur in a wide variety of situations and can have serious implications for medical and judicial outcomes. Future research is needed to determine how, in those situations, choice blindness can be avoided.

Questions 27-31

Do the following statements agree with the claims of the writer in the text?

YES if the statement agrees with the claims of the writer

NO if the statement contradicts the claims of the writer

NOT GIVEN if it is impossible to say what the writer thinks about this

27. Doctors make decisions according to the symptoms that a patient describes.

28. Our ability to deal with a lot of input material has improved over time.

29. We tend to know when we have made an error of judgement.

30. A legal trial could be significantly affected by change blindness.

31. Scientists have concluded that we try to take in as much detail as possible from our surroundings.

Questions 37 and 38

Choose TWO letters, A-E.

Which TWO statements are true for both the supermarket and voice experiments?

A. The researchers focused on non-visual material.

B. The participants were asked to explain their preferences.

C. Some of the choices made by participants were altered.

D. The participants were influenced by each other’s choices.

E. Percentage results were surprisingly low.

Questions 39 and 40

Choose TWO letters, A-E

Which TWO statements are true for the psychology experiment conducted by Merckelbach, Jelicic, and Pieters?

A. The participants had to select their two most common symptoms.

B. The participants gave each symptom a 1-5 rating.

C. Shyness proved to be the most highly rated symptom.

D. The participants changed their minds about some of their ratings.

E. The researchers focused on the strength and regularity of symptoms.

ANSWERS

1. (retronasal) smell

2. Umami

3. toxins

4. internal scents/smells

5. disciplines

6. spatial map

7. social life

8. (air) molecules

9. flavors

10. memories

11. prey

12. chocolate

13. appetites

14. v

15. ii

16. viii

17. vii

18. i

19. iv

20. C

21. B

22. A

23. C

24. on-board computer

25. ultrasound signals

26. touch-screen

27. not given

28. no

29. no

30. yes

31. no

32. visual disturbance

33. directions

34. the door

35. identification

36. valuables

37. A

38. C

39. B

40. E